What Makes Wan 2.2 Different from Wan 2.1

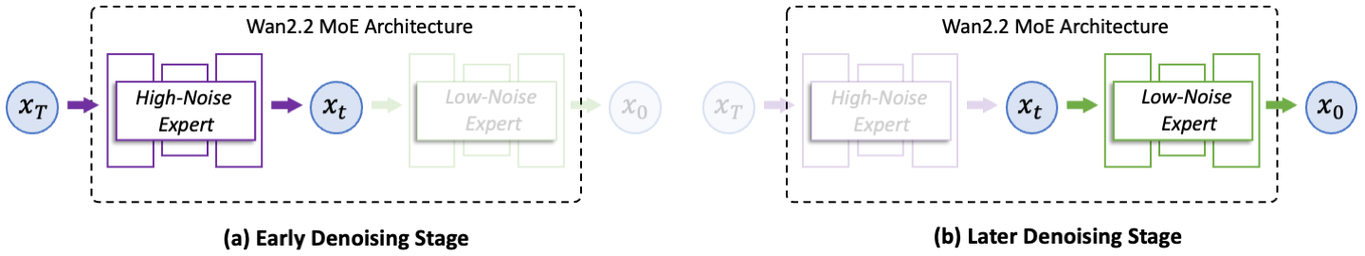

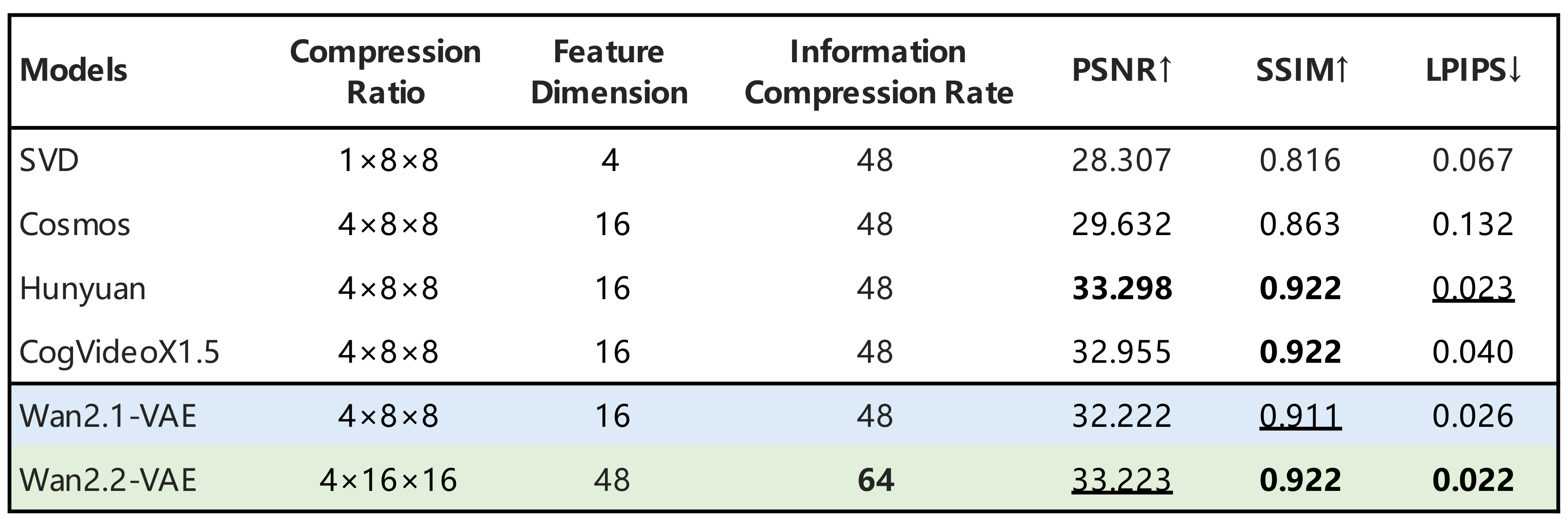

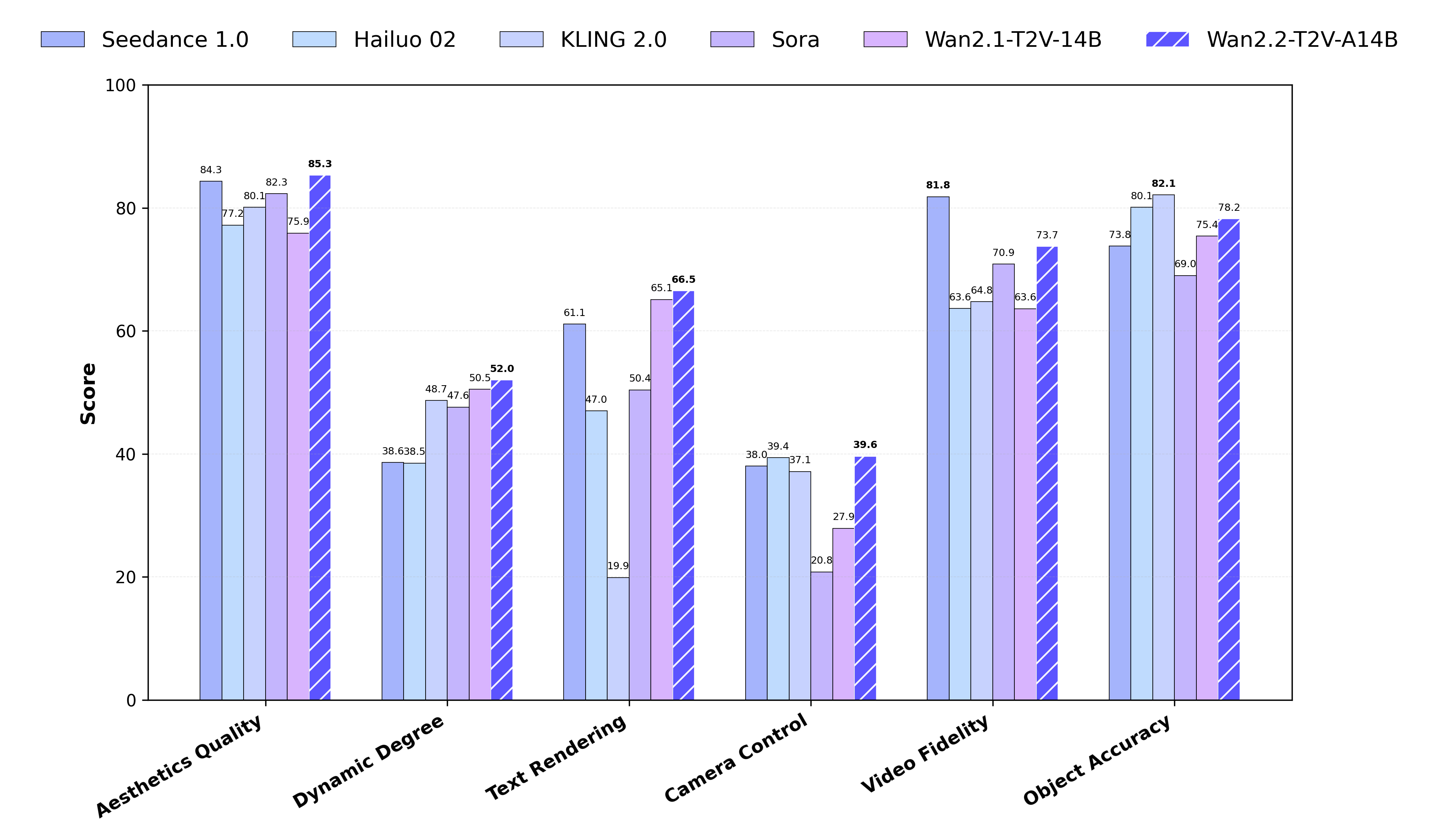

Wan 2.2 is a next-generation AI video generator developed by Wan AI, a research team affiliated with Alibaba. It supports both text-to-video and image-to-video generation, and it produces high-quality, cinematic videos faster and with more realistic motion. The key change from Wan 2.1 is a Mixture of Experts (MoE) architecture, which enables smoother generation, better prompt alignment, and stronger visual control.
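According to the public Wan 2.2 release, the 14B-class models apply MoE across the denoising process. A high-noise expert handles the early, noisy steps and sets the overall layout. A low-noise expert handles the later steps and refines detail. Only one expert (about 14B of roughly 27B total parameters) runs at each step. The Python below is a minimal sketch of that routing idea. It assumes a simple timestep threshold in place of the noise-level criterion the release describes. `TwoExpertDenoiser`, `boundary`, and the toy experts are hypothetical illustrations, not Wan 2.2's actual code or switch point.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Latent = List[float]
# Hypothetical expert signature: (latent, timestep) -> updated latent.
Expert = Callable[[Latent, int], Latent]


@dataclass
class TwoExpertDenoiser:
    """Toy noise-level router: exactly one expert runs per denoising step."""
    high_noise_expert: Expert  # early, high-noise steps: overall layout
    low_noise_expert: Expert   # later, low-noise steps: fine detail
    boundary: int              # hypothetical switch timestep, not Wan 2.2's real value

    def expert_for(self, t: int) -> str:
        # Large t means a noisier latent, so route it to the high-noise expert.
        return "high" if t >= self.boundary else "low"

    def denoise(self, latent: Latent, timesteps: List[int]) -> Tuple[Latent, List[str]]:
        trace: List[str] = []
        for t in timesteps:  # timesteps run from noisy (large t) to clean (small t)
            name = self.expert_for(t)
            expert = self.high_noise_expert if name == "high" else self.low_noise_expert
            latent = expert(latent, t)
            trace.append(name)
        return latent, trace


if __name__ == "__main__":
    # Toy experts that just rescale the latent; real experts are large diffusion transformers.
    coarse = lambda x, t: [v * 0.5 for v in x]
    fine = lambda x, t: [v * 0.9 for v in x]
    model = TwoExpertDenoiser(coarse, fine, boundary=500)
    steps = list(range(900, -1, -100))  # 900, 800, ..., 0
    out, trace = model.denoise([1.0, -2.0], steps)
    print(trace)  # five "high" steps followed by five "low" steps
```

Because only one expert runs per step, per-step compute stays close to that of a single expert even though the model's total capacity is roughly doubled.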